Background¶

The dataset of interest here is the training dataset of the Loan Prediction Problem Dataset, which can be found on Kaggle's publicly available repository. The goal of this project is to evaluate the Logistic Regression prediction model on Loan Prediction training dataset, fine-tune the model on the validation dataset, and use appropriate and accurate diagnotics for the data.

Data Dictionary:

- Loan_ID: Unique Loan ID

- Gender: Male/Female

- Married: Applicant married Y/N

- Dependents: Number of dependents

- Education: Graduate/Undergrad

- Self_Employed: Y/N

- ApplicantIncome: Applicant Income

- CoapplicantIncome: Coapplicant Income

- LoanAmount: Loan amount in thousands

- Loan_Amount_Term: Term of loan in months

- Credit_History: 1 for meeting the guidelines, 0 for not meeting the guidelines

- Property_Area: Urban/Semi Urban/Rural

- Loan_Status: Loan approved Y/N

Loading Dataset¶

import pandas as pd

import numpy as np

from collections import Counter

from pandas import DataFrame, Series

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import confusion_matrix

from sklearn.metrics import precision_score

from sklearn.metrics import recall_score

from sklearn.model_selection import GridSearchCV

from sklearn.model_selection import RepeatedStratifiedKFold

df = pd.read_csv("loan_train.csv")

df

Data Cleaning¶

df.info()

# drop Loan_ID column

df = df.drop(['Loan_ID'], axis = 1)

# Identify the columns with null values

df.isnull().sum()

# Fill in all null values appropriately

for col in df.columns:

df[col].fillna(df[col].mode()[0], inplace = True)

df['LoanAmount'].fillna(df['LoanAmount'].mean(), inplace = True)

df.isnull().sum()

# One-hot encoding

code_numeric = {'Male':1, 'Female':2,

'Yes': 1, 'No':2,

'Graduate':1, 'Not Graduate':2,

'Urban':1, 'Semiurban':2, 'Rural':3,

'Y':1, 'N':0,

'3+':3 }

df = df.applymap(lambda i: code_numeric.get(i) if i in code_numeric else i)

df['Dependents'] = pd.to_numeric(df.Dependents)

df.info()

# shuffle the dataset as all records should be independent of each other

df.sample(frac = 1)

# separating features and target

y = df['Loan_Status']

X = df.drop(['Loan_Status'], axis = 1)

# Split the dataset at 60/20/20 ratio

N = len(X)

X_train = X[:3*N//5]

X_validation = X[3*N//5:4*N//5]

X_test = X[4*N//5:]

y_train = y[:3*N//5]

y_validation = y[3*N//5:4*N//5]

y_test = y[4*N//5:]

len(X),len(X_train), len(X_validation), len(X_test)

len(y),len(y_train), len(y_validation), len(y_test)

Prediction Model¶

# Logistic Regression model

model = LogisticRegression(max_iter = 4000)

model.fit(X_train, y_train)

print('Model Score with all features: \n', model.score(X_train, y_train))

print('Coefficient: \n', model.coef_)

print('Intercept: \n', model.intercept_)

coeff = DataFrame(X_train.columns)

coeff['Coefficient Estimates'] = Series(model.coef_.flatten())

coeff.columns = ['Features','Coefficient Estimates' ]

coeff

Model Evaluation¶

# getting the probabilities of whether an event is happening or not

# for each observations in X_train

prob = model.predict_proba(X_train)

# obtaining the probabilities of event happening for all observations

p_x_val = prob[:,1]

p_x_val

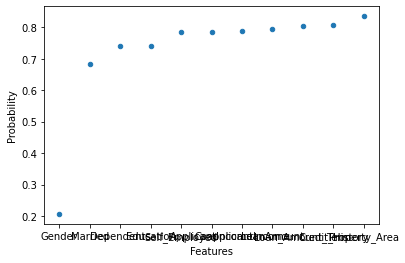

tbl_p = DataFrame(X_train.columns)

tbl_p['Probability'] = Series(p_x_val.flatten())

tbl_p.columns = ['Features','Probability' ]

tbl_p['Probability'] = sorted(tbl_p['Probability'])

tbl_p

ax = tbl_p.plot.scatter(x = 'Features', y = 'Probability')

# The actual prediction of the target variable for X_train

y_train_pred = model.predict(X_train)

y_train_pred

# actual y_train values

np.array(y_train)

print('Actual y_train values: \n', Counter(y_train))

print('Predicted y_train values: \n', Counter(y_train_pred))

confusion_matrix(y_train, y_train_pred)

# Precision = TP/(TP+FP) for training dataset

Precision = 247/(247+72)

Precision

# Recall = TP/(TP+FN) for training dataset

Recall = 247/(247+6)

Recall

Searching the Key Hyperparameters¶

solvers = ['newton-cg','lbfgs','liblinear']

penalty = ['l2']

c_val = [100, 10, 1.0, 0.1, 0.01]

grid = dict(solver = solvers, penalty = penalty, C = c_val)

cv = RepeatedStratifiedKFold(n_splits = 10, n_repeats = 3, random_state = 1)

grid_search = GridSearchCV(estimator = model, param_grid = grid, n_jobs = -1, cv = cv,scoring = 'accuracy', error_score = 0)

grid_result = grid_search.fit(X_validation, y_validation)

print("Best: %f using %s" %(grid_result.best_score_, grid_result.best_params_))

means = grid_result.cv_results_['mean_test_score']

stds = grid_result.cv_results_['std_test_score']

params = grid_result.cv_results_['params']

for mean, stdev, param in zip(means, stds, params):

print("%f (%f) with %r" % (mean, stdev, param))

Fine-tuning the Model¶

new_model = LogisticRegression(penalty ='l2',C = 100, solver = 'newton-cg').fit(X_train,y_train)

print("Old model score on Training set: \n", model.score(X_train, y_train))

print("New model score after tuning hyperparameter: \n", new_model.score(X_train, y_train))

y_test_old = model.predict(X_test)

y_test_new = new_model.predict(X_test)

Model Diagnotics¶

print('Actual y_test values: \n', Counter(y_test))

print('Predicted y_test values with old model: \n', Counter(y_test_old))

print('Predicted y_test values with new model: \n', Counter(y_test_new))

print("Precision score for old model: \n",precision_score(y_test, y_test_old))

print("Recall score for old model:\n", recall_score(y_test, y_test_old))

print("Precision score for fine-tuned model: \n",precision_score(y_test, y_test_new))

print("Recall score for fine-tuned model:\n", recall_score(y_test, y_test_new))

Conclusion¶

The default Logistic Regression model works well in training dataset, but in order to avoid overfitting, which will cause relatively more inaccuracy in prediction, it is bests to split up the training dataset and fine-tune the model on a validation dataset. After fine-tuning the hyperparameter, not only the model score improved, but also the precision score and recall score.